DOME MicroDataCenter

A microDataCenter contains compute, storage, power, cooling and networking in a very small volume and is sometimes also called a "DataCenter-in-a-box". The term has been used to describe various incarnations of this idea over the past 20 years. In late 2017, a very tightly integrated version, the DOME microDataCenter, was shown at the SuperComputing 2017 conference (SC17).[1] Its key features are hot-water cooling, a fully solid-state design, and the exclusive use of commodity components and standards.

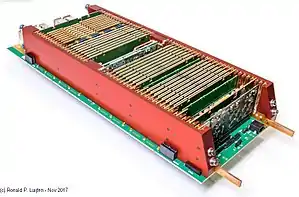

microDataCenter 32-way carrier: fully populated DOME microDataCenter as shown at SC17 (Denver, November 2017), containing 24 server and 8 FPGA boards, a 10 GbE switch, storage, power and cooling. The picture shows half of the 2U rack unit.

DOME project

DOME is a Dutch government-funded project between IBM and ASTRON, set up as a public–private partnership, to develop technology roadmaps targeting the Square Kilometer Array (SKA), the world's largest planned radio telescope.[2][3] It will be built in Australia and South Africa during the late 2010s and early 2020s. One of the seven DOME projects is MicroDataCenter (previously called Microservers), which focuses on small, inexpensive and computationally efficient servers.[4]

The goal for the MicroDataCenter is to be usable both near the SKA antennas, for early processing of the data, and inside much larger supercomputers that will perform the big-data analysis. These servers can be deployed in very large numbers, not only in cooled datacenters but also in environmentally extreme locations such as the deserts where the antennas will be located.

A common misconception is that microservers offer only low performance. This stems from the first microservers being based on Intel Atom or early 32-bit ARM cores. The aim of the DOME MicroDataCenter project is to deliver high performance at low cost and low power. A key characteristic of a MicroDataCenter is its packaging: a very small form factor that allows short communication distances. This is achieved by using microservers, which eliminate all unnecessary components by integrating as much of a traditional compute server as possible into a single SoC (Server on a chip). A microserver does not deliver the highest possible single-thread performance; instead, it offers an energy-optimized design point at medium-to-high delivered performance. In 2015 several high-performance SoCs started appearing on the market, and by late 2016 a wider choice was available, such as Qualcomm's Hydra.[5]

At server level, the 28 nm T4240-based microserver card offers twice the operations per joule of an energy-optimized server based on the 22 nm FinFET Xeon E3-1230L v3, while delivering 40% more aggregate performance. The comparison is made at the server-board level, not at the chip level.[6]
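The two ratios in the comparison above can be combined to estimate the implied power draw. Since operations per joule is performance divided by power, a server delivering 1.4 times the performance at 2 times the operations per joule draws roughly 1.4 / 2 = 0.7 times the power. The sketch below only does that arithmetic; reading "40% more" as exactly 1.4 and "twice" as exactly 2 are simplifying assumptions, and the function name is hypothetical.

```python
def implied_power_ratio(perf_ratio: float, ops_per_joule_ratio: float) -> float:
    """Return P_a / P_b given perf_a / perf_b and (ops/J)_a / (ops/J)_b.

    Operations per joule = operations per second / watts, so
    power = performance / efficiency, and the ratio follows directly.
    """
    return perf_ratio / ops_per_joule_ratio


# T4240 microserver card vs. Xeon E3-1230L v3 server, using the rounded
# figures quoted in the text (assumed exact: 1.4x performance, 2x ops/J).
ratio = implied_power_ratio(perf_ratio=1.4, ops_per_joule_ratio=2.0)
print(f"T4240 card draws about {ratio:.0%} of the Xeon server's power")
```

Because the comparison is at board level, this ratio describes whole server boards, not the two chips in isolation.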

Design

In 2012 a team at IBM Research Zürich led by Ronald P. Luijten started pursuing a very computationally dense and energy-efficient 64-bit computer design based on commodity components and running Linux.[7][8] A system-on-chip (SoC) design, in which most necessary components fit on a single chip, suited these goals best, and a definition of "microserver" emerged in which essentially a complete motherboard (except RAM, boot flash and power-conversion circuits) fits on one chip. ARM, x86 and Power ISA-based solutions were investigated, and Freescale's Power ISA-based dual-core P5020 / quad-core P5040 processor came out on top at the time of the decision in 2012.

The concept is similar to IBM's Blue Gene supercomputers, but the DOME microserver is designed around off-the-shelf components and runs standard operating systems and protocols to keep development and component costs down.[9]

The complete microserver uses the same form factor as a standard FB-DIMM module. The idea is to fit 128 of these compute cards into a 19" 2U rack drawer, together with network switchboards for external storage and communication. Cooling is to be provided by the Aquasar hot-water cooling solution pioneered by the SuperMUC supercomputer in Germany.[10]

The design of the first prototype was released to the DOME user community on July 3, 2014. The P5040 SoC, 16 GB of DRAM and a few control chips (such as a Cypress PSoC 3 used for monitoring, debugging and booting) make up a complete compute node measuring 133×55 mm. The card's pins carry one SATA port, five 1 Gbit and two 10 Gbit Ethernet ports, one SD card interface, one USB 2.0 interface, and power. The compute card operates within a 35 W power envelope, with headroom up to 70 W. The bill of materials for a single prototype is around $500.[7][8][9][11][12]
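Combining the planned 128 cards per 2U drawer with the 35 W per-card envelope (and 70 W headroom) gives a rough compute power budget for a fully populated drawer. This is a back-of-the-envelope sketch: it assumes every slot holds a P5040 card running at the stated envelope, and it ignores switches, storage, power-conversion losses and cooling. The constant and function names are illustrative only.

```python
CARDS_PER_DRAWER = 128    # planned compute cards per 19" 2U drawer
NOMINAL_W_PER_CARD = 35   # P5040 card power envelope (watts)
MAX_W_PER_CARD = 70       # headroom quoted for the card (watts)


def drawer_compute_power(cards: int, watts_per_card: float) -> float:
    """Total compute-card power in watts (excludes switches, storage, losses)."""
    return cards * watts_per_card


nominal = drawer_compute_power(CARDS_PER_DRAWER, NOMINAL_W_PER_CARD)
peak = drawer_compute_power(CARDS_PER_DRAWER, MAX_W_PER_CARD)
print(f"nominal: {nominal / 1000:.2f} kW, with full headroom: {peak / 1000:.2f} kW")
```

Under these assumptions a full drawer concentrates several kilowatts of compute in two rack units, which suggests why the design relies on hot-water cooling.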

In late 2013 a new SoC was chosen for the second prototype. Freescale's newer 12-core, 24-thread T4240 is significantly more powerful and operates within a power envelope comparable to the P5040's, at 43 W TDP. The new microserver card offers 24 GB of DRAM and is both powered and cooled through its copper heat spreader. It was being built and validated for larger-scale deployment in the full 2U drawer in early 2017. To support native 10 GbE signalling, the DIMM connector was replaced with the SPD08 connector.

In late 2016 the production version of the T4240-based microserver card was completed. Around the same time, a second server prototype board based on the NXP (formerly Freescale) LS2088A SoC, with eight ARMv8 Cortex-A72 cores, was completed; it uses the same form factor and connector and is therefore plug-compatible.[9][12][13][14]

History

microDataCenter production version: 32-way carrier with 24 T4240ZMS servers and 8 FPGA cards.

The smallest-form-factor micro data center technology was pioneered by the DOME microserver team in Zurich. Compute is provided by multiple microservers and networking by at least one micro switch module. The first live demo of an 8-way prototype system based on the P5040ZMS was performed at Supercomputing 2015 as part of the emerging technologies display,[15] followed by a live demo at CeBIT in March 2016. An 8-way HPL (High-Performance Linpack) run was demonstrated at CeBIT, earning the system the name 'LinPack-in-a-shoebox'.

In 2017 the team finished the production-ready version, which contains 64 T4240ZMS servers, two 10/40 GbE switches, storage, power and cooling in a 2U rack unit. The pictured 32-way carrier (half of the 2U rack unit) is populated with 24 T4240ZMS servers, 8 FPGA boards, a switch, storage, power and cooling. This technology increases density 20-fold compared with traditionally packaged datacenter technology while delivering the same aggregate performance. This is achieved through a new top-down design that minimizes component count, uses an SoC instead of a traditional CPU, and relies on dense packaging enabled by hot-water cooling.[16]
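To put the 64-server 2U unit in perspective, the per-card figures given for the T4240 card (12 cores, 24 threads, 24 GB of DRAM) can be summed over all servers. This sketch assumes each T4240ZMS server matches that card description, which the text does not state explicitly; the `ServerCard` type and `aggregate` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerCard:
    """Per-card resources (values assumed from the T4240 card description)."""
    cores: int
    threads: int
    dram_gb: int


def aggregate(card: ServerCard, count: int) -> dict[str, int]:
    """Sum per-card resources over `count` identical cards."""
    return {
        "cores": card.cores * count,
        "threads": card.threads * count,
        "dram_gb": card.dram_gb * count,
    }


# Assumption: each T4240ZMS is a 12-core / 24-thread / 24 GB card.
t4240zms = ServerCard(cores=12, threads=24, dram_gb=24)
totals = aggregate(t4240zms, count=64)  # 64 servers in the 2U production unit
print(totals)
```

Under that assumption, one 2U unit holds 768 cores, 1536 hardware threads and 1536 GB of DRAM across its server cards, not counting the FPGA boards of a mixed configuration such as the one pictured.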

Future

A startup company, still in stealth mode, was in the process of obtaining a technology licence from IBM to bring the technology to market in the first half of 2018.

The startup company was unable to obtain seed funding to start productization. The project, including all its resources, has been mothballed, and Ronald P. Luijten has retired from the Zurich research lab (August 2020).

References

- Tom's Hardware on SC17, see slide 27

- ASTRON & IBM Center for Exascale Technology

- Square Kilometer Array: Ultimate Big Data Challenge

- ASTRON & IBM Center for Exascale Technology - Microservers

- "Qualcomm Hydra". Archived from the original on 2017-04-17. Retrieved 2017-04-16.

- "Energy-Efficient Microserver Based on a 12-Core 1.8GHz 188K-CoreMark 28nm Bulk CMOS 64b SoC for Big-Data Applications with 159GB/s/L Memory Bandwidth System Density". R. Luijten et al., ISSCC 2015, San Francisco, Feb 2015.

- "IBM high-density μServer demonstration platform leveraging PPC, Linux and hot-water cooling" (PDF). Archived from the original (PDF) on 2014-07-14. Retrieved 2014-07-02.

- Big Science, Tiny Microservers: IBM Research Pushes 64-Bit Possibilities

- IBM DOME Microserver Could Appeal To Enterprises

- IBM/ASTRON DOME 64-bit Microserver

- "The IBM-ASTRON 64bit μServer demonstrator for SKA" (PDF). Archived from the original (PDF) on 2014-07-14. Retrieved 2014-07-02.

- "The IBM and ASTRON 64bit μServer for DOME". Archived from the original on 2014-07-14. Retrieved 2014-07-03.

- NLeSC signs DOME agreement with IBM and ASTRON

- IBM to present the incredible shrinking supercomputer

- "DOME Hot-Water Cooled Multinode 64 Bit Microserver Cluster". Archived from the original on 2016-01-27. Retrieved 2017-12-05.

- DOME microDataCenter project